2. Detecting facial expressions from videos#

Written by Jin Hyun Cheong and Eshin Jolly

In this tutorial we’ll explore how to use the Detector class to process video files. You can try it out interactively in Google Collab:

# Uncomment the line below and run this only if you're using Google Collab

# !pip install -q py-feat

2.1 Setting up the Detector#

We’ll begin by creating a new Detector instance just like the previous tutorial and using the defaults:

from feat import Detector

detector = Detector()

detector

Detector(face_model=img2pose, landmark_model=mobilefacenet, au_model=xgb, emotion_model=resmasknet, facepose_model=img2pose, identity_model=facenet)

2.2 Processing videos#

Detecting facial expressions in videos is easy to do using the .detect_video() method. This sample video included in Py-Feat is by Wolfgang Langer from Pexels.

from feat.utils.io import get_test_data_path

import os

test_data_dir = get_test_data_path()

test_video_path = os.path.join(test_data_dir, "WolfgangLanger_Pexels.mp4")

# Show video

from IPython.core.display import Video

Video(test_video_path, embed=False)

Just like before we can call the .detect() method, but this time we tell Py-feat that data_type='video'.

Here we also set skip_frames=24 which tells the detector to process only every 24th frame for the sake of speed.

We also set face_detection_threshold=0.95 which tells the detector to be extremely conservative in what it considers a face. Since we already know that this video is a continuous front-on shot of one person, raising this value from the default of 0.5 will result in a much fewer false positive detections of more than one face per frame.

video_prediction = detector.detect(

test_video_path, data_type="video", skip_frames=24, face_detection_threshold=0.95

)

video_prediction.head()

0%| | 0/20 [00:00<?, ?it/s]

100%|██████████| 20/20 [00:14<00:00, 1.34it/s]

| FaceRectX | FaceRectY | FaceRectWidth | FaceRectHeight | FaceScore | x_0 | x_1 | x_2 | x_3 | x_4 | ... | Identity_509 | Identity_510 | Identity_511 | Identity_512 | input | frame | FrameHeight | FrameWidth | approx_time | Identity | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 307.0 | 33.0 | 269.0 | 327.0 | 0.997972 | 330.422302 | 333.200439 | 338.313690 | 346.049591 | 357.961914 | ... | -0.047752 | -0.006772 | 0.013241 | -0.006375 | /Users/esh/Documents/pypackages/py-feat/feat/t... | 0 | 360.0 | 640.0 | 00:00 | Person_0 |

| 1 | 329.0 | 46.0 | 253.0 | 314.0 | 0.997641 | 346.127106 | 347.800049 | 351.786346 | 358.154510 | 369.051941 | ... | -0.076541 | 0.063415 | 0.017766 | -0.007070 | /Users/esh/Documents/pypackages/py-feat/feat/t... | 24 | 360.0 | 640.0 | 00:01 | Person_1 |

| 2 | 314.0 | 21.0 | 258.0 | 336.0 | 0.997934 | 340.927063 | 341.121582 | 343.485046 | 347.379150 | 356.129974 | ... | -0.066493 | 0.015429 | 0.023260 | 0.014124 | /Users/esh/Documents/pypackages/py-feat/feat/t... | 48 | 360.0 | 640.0 | 00:02 | Person_2 |

| 3 | 314.0 | 70.0 | 239.0 | 290.0 | 0.985917 | 315.397034 | 315.924896 | 318.986115 | 325.221039 | 335.382019 | ... | -0.057963 | 0.047913 | 0.042580 | 0.043727 | /Users/esh/Documents/pypackages/py-feat/feat/t... | 72 | 360.0 | 640.0 | 00:03 | Person_3 |

| 4 | NaN | NaN | NaN | NaN | 0.000000 | NaN | NaN | NaN | NaN | NaN | ... | 0.010204 | -0.039508 | 0.009879 | -0.003504 | /Users/esh/Documents/pypackages/py-feat/feat/t... | 96 | 360.0 | 640.0 | 00:04 | Person_4 |

5 rows × 692 columns

We can see that our 20s long video, recorded at 24 frames-per-second, produces 20 predictions because we set skip_frames=24:

video_prediction.shape

(20, 692)

2.3 Visualizing predictions#

You can also plot the detection results from a video. The frames are not extracted from the video (that will result in thousands of images) so the visualization only shows the detected face without the underlying image.

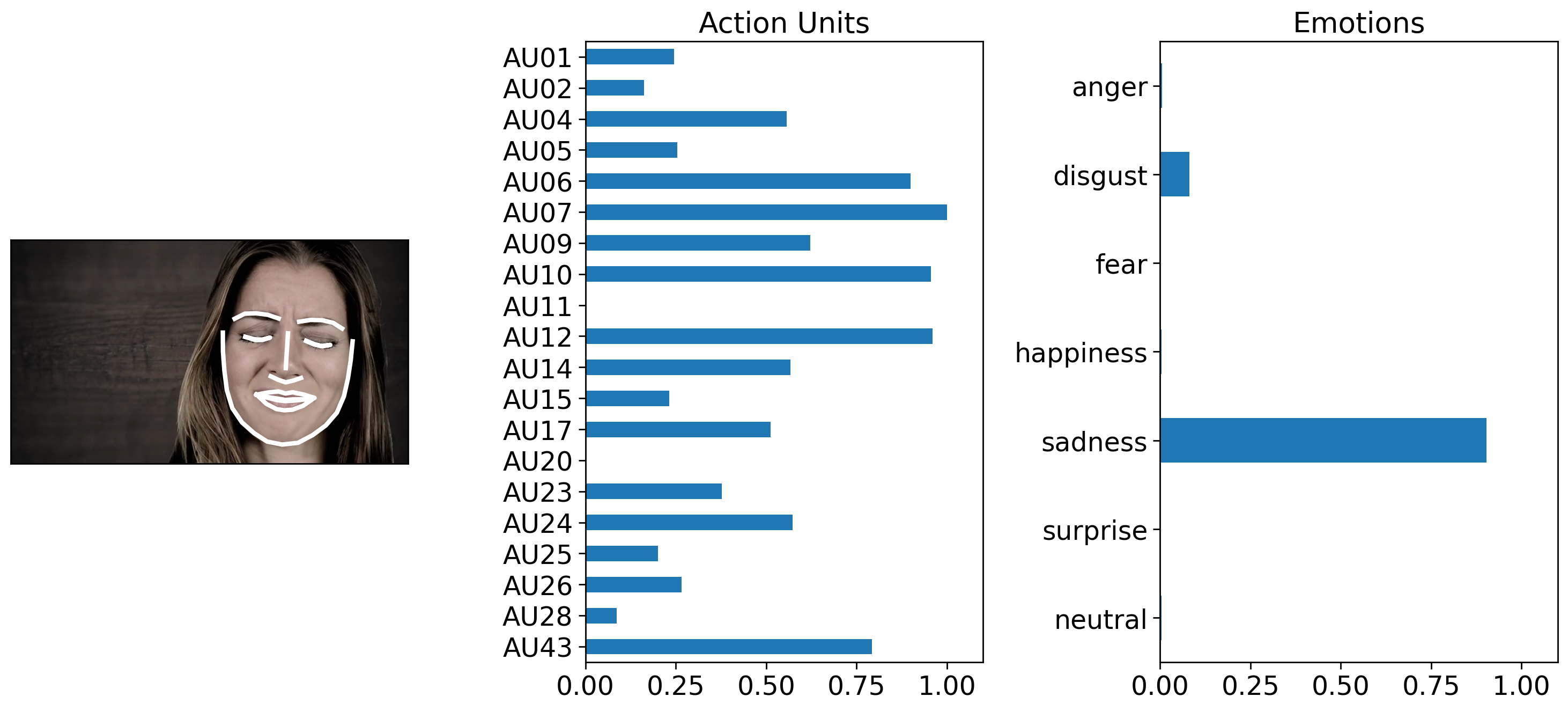

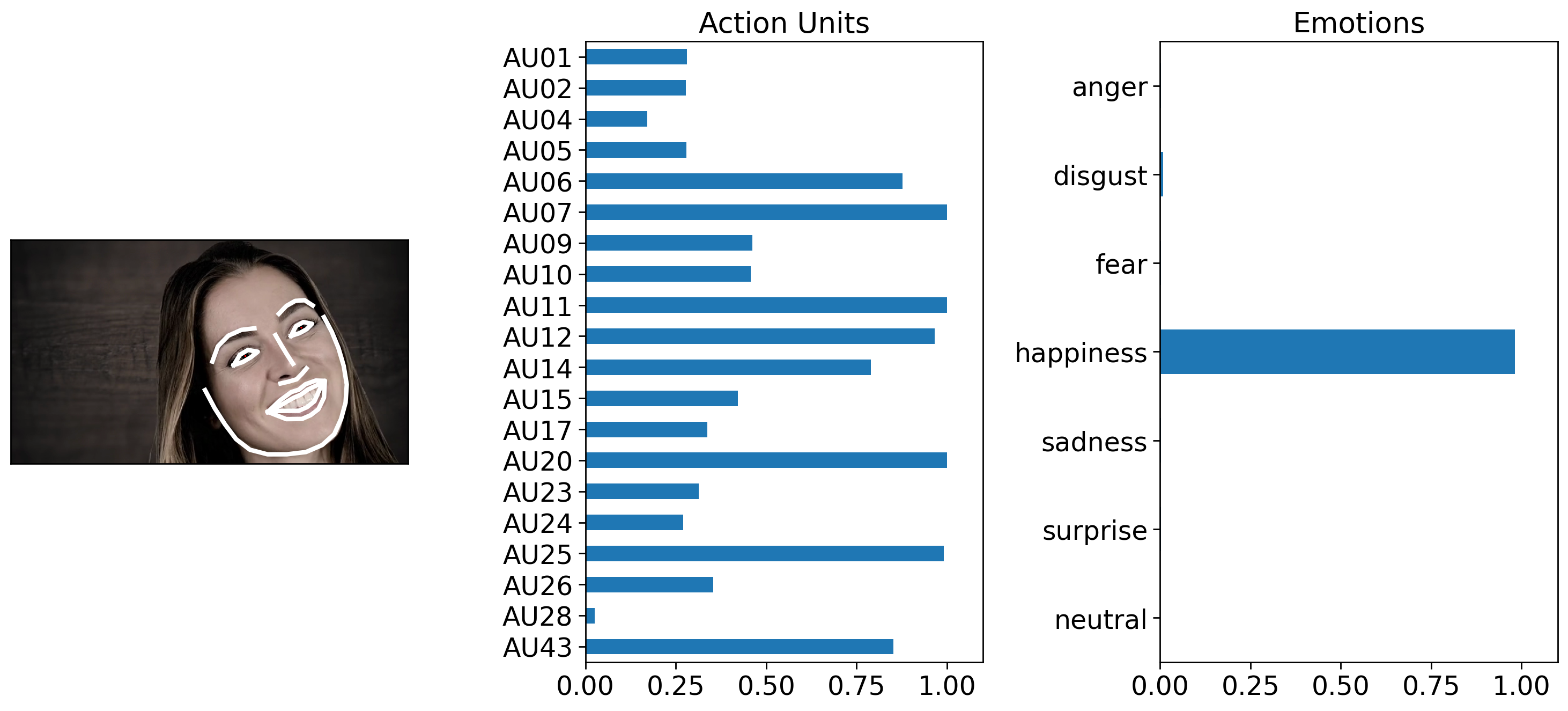

The video has 24 fps and the actress show sadness around the 0:02, and happiness at 0:14 seconds.

# Frame 48 = ~0:02

# Frame 408 = ~0:14

video_prediction.query("frame in [48, 408]").plot_detections(

faceboxes=False, add_titles=False

)

[<Figure size 1500x700 with 3 Axes>, <Figure size 1500x700 with 3 Axes>]

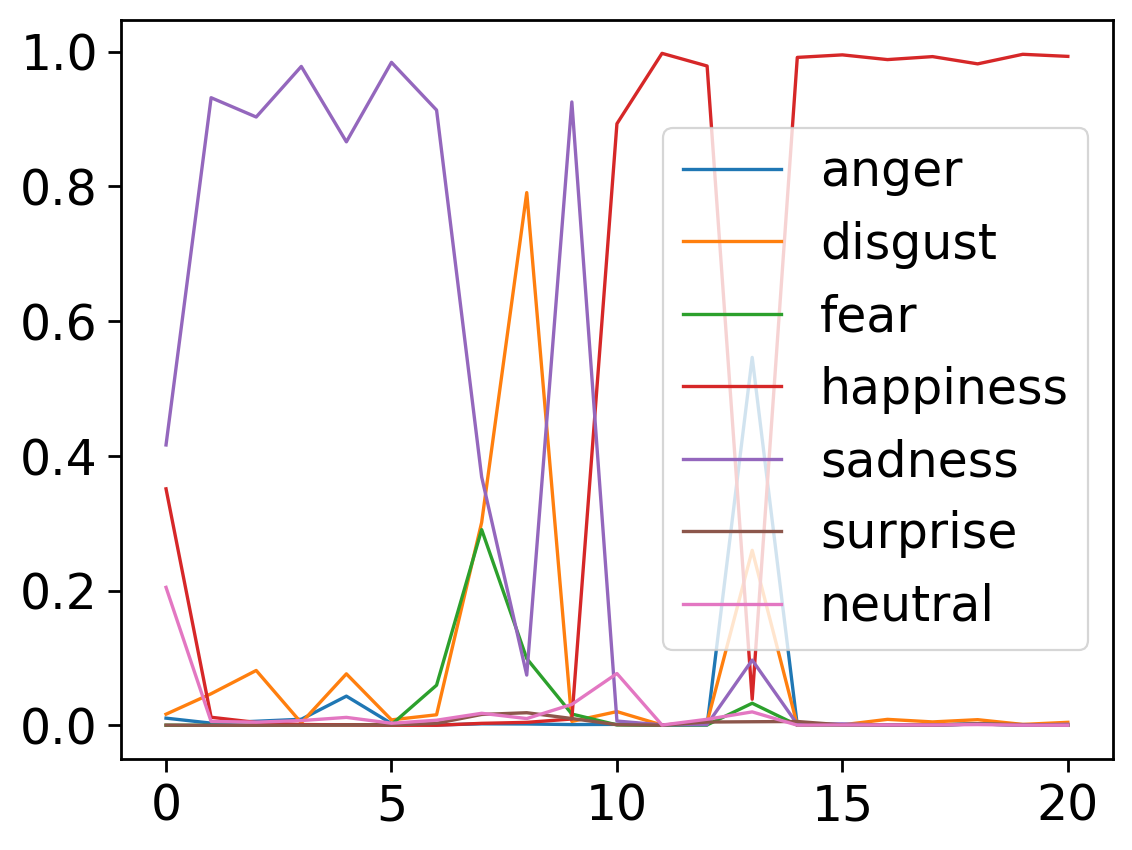

We can also leverage existing pandas plotting functions to show how emotions unfold over time. We can clearly see how her emotions change from sadness to happiness.

axes = video_prediction.emotions.plot()